Today we’re going to be wrapping up this series on file uploads for the web. If you’ve been following along, you should now be familiar with enabling file uploads on the front end and the back end. We’ve covered architectural decisions that reduce the cost of hosting our files and improve delivery performance. So I thought we would wrap up the series today by covering security as it relates to file uploads.

In case you’d like to go back and revisit any earlier blogs in the series, here’s a list of what we’ve covered so far:

Introduction

Anytime I discuss the topic of security, I like to consult the experts at OWASP.org. Conveniently, they have a File Upload Cheat Sheet, which outlines several attack vectors related to file uploads and steps to mitigate them.

Today we’ll walk through this cheat sheet and how to implement some of its recommendations in an existing application.

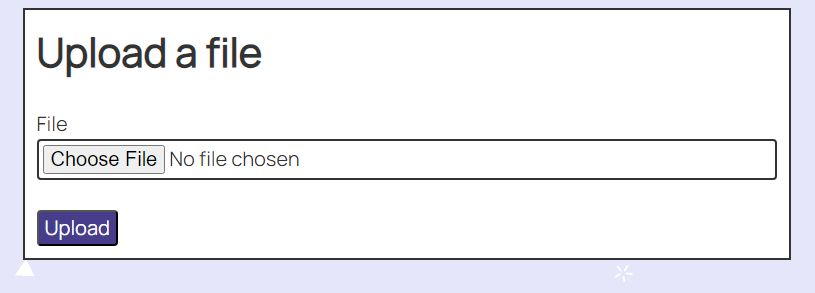

For a bit of background, the application has a frontend with a form that has a single file input that uploads that file to a backend.

The backend is powered by Nuxt.js’s Event Handler API, which receives an incoming request as an “event” object, detects whether it’s a multipart/form-data request (always true for file uploads), and passes the underlying Node.js request object (an IncomingMessage) to a custom function called parseMultipartNodeRequest.

    import formidable from 'formidable';

    /* global defineEventHandler, getRequestHeaders, readBody */

    /**
     * @see https://nuxt.com/docs/guide/concepts/server-engine
     * @see https://github.com/unjs/h3
     */
    export default defineEventHandler(async (event) => {
      let body;
      const headers = getRequestHeaders(event);

      if (headers['content-type']?.includes('multipart/form-data')) {
        body = await parseMultipartNodeRequest(event.node.req);
      } else {
        body = await readBody(event);
      }
      console.log(body);

      return { ok: true };
    });

All the code we’ll be focusing on today will live within this parseMultipartNodeRequest function. And since it works with the Node.js primitives, everything we do should work in any Node environment, regardless of whether you’re using Nuxt or Next or any other sort of framework or library.

Inside parseMultipartNodeRequest we:

Create a new Promise

Instantiate a multipart/form-data parser using a library called formidable

Parse the incoming Node request object

The parser writes files to their storage location

The parser provides information about the fields and the files in the request

Once it’s done parsing, we resolve parseMultipartNodeRequest’s Promise with the fields and files.

    /**
     * @param {import('http').IncomingMessage} req
     */
    function parseMultipartNodeRequest(req) {
      return new Promise((resolve, reject) => {
        const form = formidable({
          multiples: true,
        });
        form.parse(req, (error, fields, files) => {
          if (error) {
            reject(error);
            return;
          }
          resolve({ ...fields, ...files });
        });
      });
    }

That’s what we’re starting with today, but if you want a better understanding of the low-level concepts for handling multipart/form-data requests in Node, check out “Handling File Uploads on the Backend in Node.js (& Nuxt).” It covers low-level topics like chunks, streams, and buffers, then shows how to use a library instead of writing one from scratch.

Securing Uploads

With our app set up and running, we can start to implement some of the recommendations from OWASP’s cheat sheet.

Extension Validation

With this technique, we check the file extension of each upload and only let files with an allowed extension into our system.

Fortunately, this is pretty easy to implement with formidable. When we initialize the library, we can pass a filter configuration option: a function that receives an object with some details about the file, including its original file name. The function must return a boolean that tells formidable whether or not to write the file to the storage location.

    const form = formidable({
      // other config options
      filter(file) {
        // filter logic here
      }
    });

We could check file.originalFilename against a regular expression that tests whether a string ends with one of the allowed file extensions. For any upload that doesn’t pass the test, we can return false to tell formidable to skip that file, and for everything else, we can return true to tell formidable to write the file to the system.

    const form = formidable({
      // other config options
      filter(file) {
        const originalFilename = file.originalFilename ?? '';
        // Enforce file ends with allowed extension
        const allowedExtensions = /\.(jpe?g|png|gif|avif|webp|svg|txt)$/i;
        if (!allowedExtensions.test(originalFilename)) {
          return false;
        }
        return true;
      }
    });
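
To get a feel for what that regular expression accepts, here’s a small standalone sketch. The helper name hasAllowedExtension is my own (it is not part of formidable), and the sample file names are made up for illustration.

```javascript
// Same pattern as the filter: the name must END with an allowed extension.
const allowedExtensions = /\.(jpe?g|png|gif|avif|webp|svg|txt)$/i;

/**
 * Hypothetical helper (not part of formidable).
 * @param {string | null | undefined} originalFilename
 * @returns {boolean}
 */
function hasAllowedExtension(originalFilename) {
  return allowedExtensions.test(originalFilename ?? '');
}

console.log(hasAllowedExtension('photo.JPG')); // true (case-insensitive)
console.log(hasAllowedExtension('notes.txt')); // true
console.log(hasAllowedExtension('script.php')); // false
console.log(hasAllowedExtension('photo.jpg.exe')); // false (only the last extension counts)
console.log(hasAllowedExtension('shell.php.jpg')); // true (a renamed file still passes)
console.log(hasAllowedExtension(null)); // false
```

The second-to-last case is worth noticing: an extension check only looks at the name, so anything renamed to end in an allowed extension gets through.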

Filename Sanitization

Filename sanitization is a good way to protect against file names that may be too long or include characters that are not acceptable for the operating system.

The recommendation is to generate a new filename for any upload. Some options may be a random string generator, a UUID, or some sort of hash.

Once again, formidable makes this easy for us by providing a filename configuration option. And once again it should be a function that receives details about the upload, but this time it must return a string.

    const form = formidable({
      // other config options
      filename(name, ext, part, form) {
        // return some random string
      },
    });

We can actually skip this step because formidable’s default behavior is to generate a random hash for every upload. So we’re already following best practices just by using the default settings.
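
If you ever do want to override the default (say, to keep a known-safe extension on the stored file), a generator could look something like the sketch below. The helper name generateSafeFilename and the extension allow-list are assumptions of mine; the only real API used here is randomUUID from Node’s built-in crypto module.

```javascript
import { randomUUID } from 'node:crypto';

// Hypothetical allow-list; adjust to whatever your application accepts.
const SAFE_EXTENSIONS = new Set(['.jpg', '.jpeg', '.png', '.gif', '.avif', '.webp']);

/**
 * Hypothetical helper: builds a storage name that contains nothing from the
 * user-supplied file name except (optionally) an allow-listed extension.
 * @param {string} [ext] Extension including the leading dot, e.g. ".png"
 * @returns {string}
 */
function generateSafeFilename(ext = '') {
  const normalized = ext.toLowerCase();
  const suffix = SAFE_EXTENSIONS.has(normalized) ? normalized : '';
  return `${randomUUID()}${suffix}`;
}

console.log(generateSafeFilename('.PNG')); // a random UUID followed by ".png"
console.log(generateSafeFilename('.php')); // a random UUID with the extension dropped
```

A function like this could be called from the filename option, since all that option needs back is a string.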

Upload and Download Limits

Next, we’ll tackle upload limits. This protects our application from running out of storage, limits how much we pay for storage, and limits how much data could be transferred if those files get downloaded, which may also affect how much we have to pay.

Once again, we get some basic protection just by using formidable because it sets a default value of 200 megabytes as the maximum file upload size.

If we want, we could override that value with a custom maxFileSize configuration option. For example, we could set it to 10 megabytes like this:

    const form = formidable({
      // other config options
      maxFileSize: 1024 * 1024 * 10,
    });

The right value to choose is highly subjective based on your application needs. For example, an application that accepts high-definition video files will need a much higher limit than one that expects only PDFs.

You’ll want to choose the lowest value that doesn’t get in the way of normal users.
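
One optional extra, sketched here under my own assumptions: before handing the request to the parser, we could compare the Content-Length header against a budget for the whole request and reject obviously oversized requests early. The helper name and the 25 MB budget are made up for illustration. The header is supplied by the client and may be missing, so this would only complement maxFileSize, not replace it.

```javascript
const MB = 1024 * 1024;

// Hypothetical budget for the whole request body (all files plus fields).
const MAX_REQUEST_BYTES = 25 * MB;

/**
 * Hypothetical helper: returns true when the declared body size is over budget.
 * A missing or malformed header returns false, so the parser's own limits
 * still have to do the real enforcement.
 * @param {Record<string, string | string[] | undefined>} headers
 * @param {number} [maxBytes]
 * @returns {boolean}
 */
function declaresOversizedBody(headers, maxBytes = MAX_REQUEST_BYTES) {
  const raw = headers['content-length'];
  const value = Array.isArray(raw) ? raw[0] : raw;
  const declared = Number.parseInt(value ?? '', 10);
  return Number.isFinite(declared) && declared > maxBytes;
}

console.log(declaresOversizedBody({ 'content-length': String(30 * MB) })); // true
console.log(declaresOversizedBody({ 'content-length': '1024' })); // false
console.log(declaresOversizedBody({})); // false
```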

File Storage Location

It’s important to be intentional about where uploaded files get stored. The top recommendation is to store uploaded files in a completely different location than where your application server is running.

That way, if malware does get into the system, it will still be quarantined without access to the running application. This can prevent access to sensitive user information, environment variables, and more.

In one of my previous posts, “Stream File Uploads to S3 Object Storage and Reduce Costs,” I showed how to stream file uploads to an object storage provider. So it’s not only more cost-effective, but it’s also more secure.

But if storing files on a different host isn’t an option, the next best thing we can do is make sure that uploaded files do not end up in the root folder on the application server.

Again, formidable handles this by default. It stores any uploaded files in the operating system’s temp folder. That’s good for security, but if you want to access those files later on, the temp folder is probably not the best place to store them.

Fortunately, there’s another formidable configuration setting called uploadDir to explicitly set the upload location. It can be either a relative path or an absolute path.

So, for example, I may want to store files in a folder called “/uploads” inside my project folder. This folder must already exist, and if I want to use a relative path, it must be relative to the application runtime (usually the project root). That being the case, I can set the config option like this:

    const form = formidable({
      // other config options
      uploadDir: './uploads',
    });
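
Because a relative uploadDir depends on where the process was started, it can help to resolve it to an absolute path up front, and to double-check that any path we later build from it stays inside that folder. Here’s a minimal sketch, assuming a helper name of my own (resolveInside is not a formidable API):

```javascript
import path from 'node:path';

// Resolve once, relative to the directory the process was started from.
const uploadDir = path.resolve(process.cwd(), 'uploads');

/**
 * Hypothetical helper: joins a file name onto a base folder and throws if the
 * result is not a path inside that folder (for example via "../" segments).
 * @param {string} baseDir Absolute path of the upload folder
 * @param {string} filename Name of the stored file
 * @returns {string} Absolute path inside baseDir
 */
function resolveInside(baseDir, filename) {
  const target = path.resolve(baseDir, filename);
  const relative = path.relative(baseDir, target);
  const invalid =
    relative === '' || // resolves to the folder itself
    relative === '..' ||
    relative.startsWith(`..${path.sep}`) ||
    path.isAbsolute(relative); // e.g. a different drive on Windows
  if (invalid) {
    throw new Error(`Refusing to use "${filename}" as a file path inside ${baseDir}`);
  }
  return target;
}

console.log(resolveInside(uploadDir, 'abc123')); // an absolute path ending in uploads/abc123
```

With generated file names this check should never fail, but it is a cheap safety net for any code path that builds file paths from user input.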

Content-Type Validation

Content-Type validation is important to ensure that the uploaded files match a given list of allowed MIME-types. It’s similar to extension validation, but it’s important to also check a file’s MIME-type because it’s easy for an attacker to simply rename a file to include a file extension that’s in our allowed list.

Looking back at formidable’s filter function, we’ll see that it also provides us with the file’s MIME-type. So we could add some logic that enforces that the file’s MIME-type matches our allow list.

We could modify our old function to also filter out any upload that is not an image.

    const form = formidable({
      // other config options
      filter(file) {
        const originalFilename = file.originalFilename ?? '';
        // Enforce file ends with allowed extension
        const allowedExtensions = /\.(jpe?g|png|gif|avif|webp|svg|txt)$/i;
        if (!allowedExtensions.test(originalFilename)) {
          return false;
        }
        const mimetype = file.mimetype ?? '';
        // Enforce file uses allowed mimetype
        return Boolean(mimetype && (mimetype.includes('image')));
      }
    });

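Now, this would be great in theory, but the reality is that formidable doesn’t inspect the file’s contents. The MIME-type it reports comes from the Content-Type the client sends along with each file, and browsers generally derive that from the file extension.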

That makes it no more useful than our extension validation. It’s unfortunate, but it also makes sense and is likely to remain the case.

formidable’s filter function is designed to prevent files from being written to disk. It runs as it’s parsing uploads. But the only reliable way to know a file’s MIME-type is by checking the file’s contents. And you can only do that after the file has already been written to the disk.

So we technically haven’t solved this issue yet, but checking file contents actually brings us to the next issue, file content validation.
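
To make “checking the file’s contents” a little more concrete, here’s a rough sketch that looks at the first few bytes of a file (its “magic number”). The function name sniffImageType is my own, and it only knows a handful of image signatures; in a real project, a maintained detection library would likely be a better choice.

```javascript
/**
 * Hypothetical helper: guesses an image MIME-type from a file's leading bytes.
 * Returns null when the signature is not one of the few checked here.
 * @param {Uint8Array} bytes At least the first 12 bytes of the file
 * @returns {string | null}
 */
function sniffImageType(bytes) {
  const startsWith = (signature, offset = 0) =>
    signature.every((byte, i) => bytes[offset + i] === byte);
  const ascii = (text) => [...text].map((char) => char.charCodeAt(0));

  if (startsWith([0xff, 0xd8, 0xff])) return 'image/jpeg';
  if (startsWith([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a])) return 'image/png';
  if (startsWith(ascii('GIF87a')) || startsWith(ascii('GIF89a'))) return 'image/gif';
  // WebP: "RIFF", four bytes of file size, then "WEBP".
  if (startsWith(ascii('RIFF')) && startsWith(ascii('WEBP'), 8)) return 'image/webp';
  return null;
}

// A text file renamed to ".jpeg" does not start with the JPEG signature.
console.log(sniffImageType(Buffer.from('just some text'))); // null
console.log(sniffImageType(Buffer.from([0xff, 0xd8, 0xff, 0xe0]))); // "image/jpeg"
```

Once a file has been written, its first bytes could be read from disk and passed to a function like this. That still happens after the write, which is exactly the limitation described above.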

Intermission

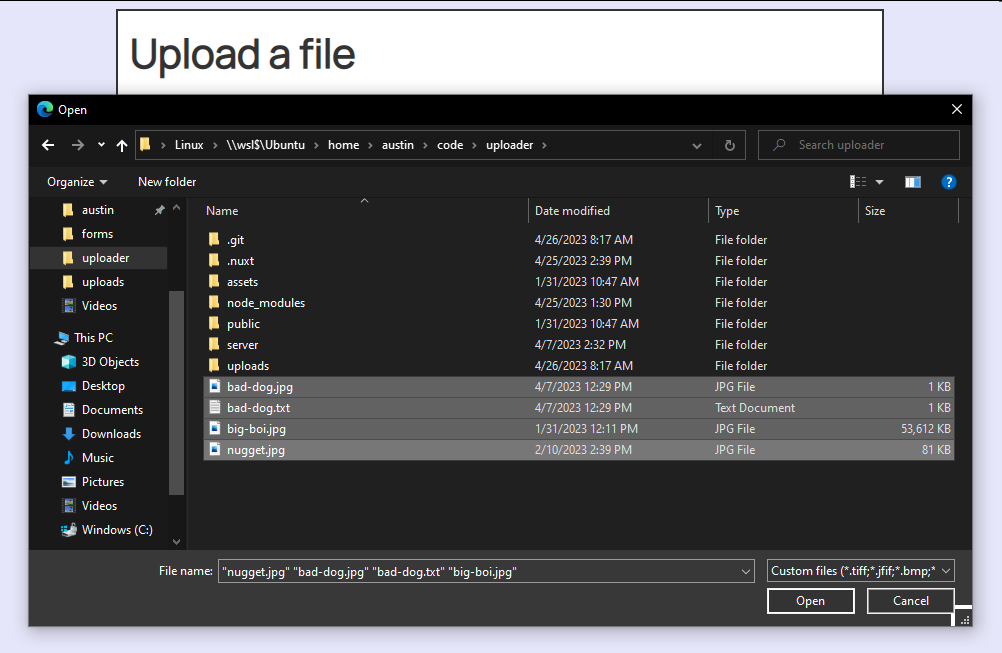

Before we get into that, let’s check the current functionality. I can upload several files, including three JPEGs and one text file (note that one of the JPEGs is quite large).

When I upload this list of files, I’ll get a failed request with a status code of 500. The server console reports the error is because the maximum allowed file size was exceeded.

![Server console reporting the error, "[nuxt] [request error] [unhandled] [500] options.maxFileSize (10485760 bytes) exceeded, received 10490143 bytes of file data"](https://austingil.com/wp-content/uploads/image-81-1080x374.png)

This is good.

We’ve prevented a file that exceeds the maximum file size limit from being uploaded into our system (we should probably do a better job of handling errors on the backend, but that’s a job for another day).
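
As a small step toward that better error handling, the sketch below maps a parser error to an HTTP status code. It assumes the error object carries a numeric httpCode property, which is my understanding of how recent formidable versions report problems like an exceeded size limit, so check that against the version you have installed. The helper name statusForUploadError is made up.

```javascript
/**
 * Hypothetical helper: picks a response status for a failed upload.
 * Assumes (verify for your formidable version) that parser errors expose
 * a numeric `httpCode`, e.g. 413 when a size limit is exceeded.
 * @param {unknown} error
 * @returns {number}
 */
function statusForUploadError(error) {
  const code = error && typeof error === 'object' ? error.httpCode : undefined;
  const isClientError = Number.isInteger(code) && code >= 400 && code < 500;
  // Anything we do not recognise stays a generic server error.
  return isClientError ? code : 500;
}

console.log(statusForUploadError({ httpCode: 413 })); // 413
console.log(statusForUploadError(new Error('disk full'))); // 500
```

Responding with a 4xx status in these cases would tell the client that the upload itself was the problem, not the server.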

Now, what happens when we upload all those files except the big one?

No error.

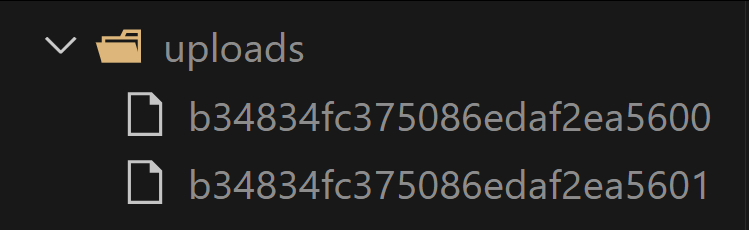

And looking in the “uploads” folder, we’ll see that despite uploading three files, only two were saved. The .txt file did not get past our filter function.

We’ll also notice that the names of the two saved files are random hash values. Once again, that’s thanks to formidable’s default behavior.

Now there’s just one problem. One of those two successful uploads came from the “bad-dog.jpeg” file I selected. That file was actually a copy of the “bad-dog.txt” that I renamed. And THAT file actually contains malware 😱😱😱

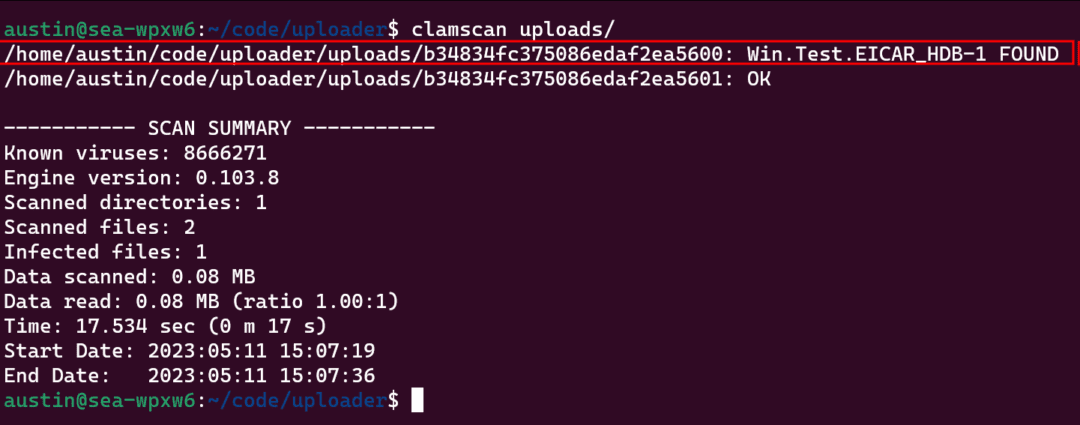

We can prove it by running one of the most popular Linux antivirus tools on the uploads folder, ClamScan. Yes, ClamScan is a real thing. Yes, that’s its real name. No, I don’t know why they called it that. Yes, I know what it sounds like.

(Side note: The file I used was created for testing anti-malware software. It’s harmless, but it’s designed to trigger malware scanners. That meant I had to get around browser warnings, virus scanner warnings, firewall blockers, AND angry emails from our IT department just to get a copy. So you better learn something.)

OK, now let’s talk about file content validation.

File Content Validation

File content validation is a fancy way of saying, “scan the file for malware”, and it’s one of the more important security steps you can take when accepting file uploads.

We used ClamScan above, so now you might be thinking, “Aha, why don’t I just scan the files as formidable parses them?”

Similar to MIME-type checking, malware scanning can only happen after the file has already been written to disk. Additionally, scanning file contents can take a long time, far longer than is appropriate in a request-response cycle. You wouldn’t want to keep the user waiting that long.

So we have two potential problems:

By the time we can start scanning a file for malware, it’s already on our server.

We can’t wait for scans to finish before responding to users’ upload requests.

Bummer…

Malware Scanning Architecture

Running a malware scan on every single upload request is probably not an option, but there are solutions. Remember that the goal is to protect our application from malicious uploads as well as to protect our users from malicious downloads.

Instead of scanning uploads during the request-response cycle, we could accept all uploaded files, store them in a safe location, and add a record in a database containing the file’s metadata, storage location, and a flag to track whether the file has been scanned.

Next, we could schedule a background process that queries the database for unscanned files and scans each one. If it finds any malware, it could remove it, quarantine it, and/or notify us. For all the clean files, it can update their respective database records to mark them as scanned.
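
Here’s a rough, in-memory sketch of that background job. Everything in it is an assumption for illustration: the record shape, the status names, and the scanFile function, which stands in for whatever scanner you use (for example, a wrapper around ClamScan). A real implementation would read and update rows in your database instead of an array.

```javascript
/**
 * Hypothetical record shape:
 * { id: string, path: string, status: 'pending' | 'clean' | 'infected' }
 *
 * @param {Array<{ id: string, path: string, status: string }>} records
 * @param {(path: string) => Promise<boolean>} scanFile Resolves true if malware was found
 * @returns {Promise<Array<{ id: string, path: string, status: string }>>} Newly flagged records
 */
async function scanPendingUploads(records, scanFile) {
  const pending = records.filter((record) => record.status === 'pending');

  for (const record of pending) {
    try {
      const infected = await scanFile(record.path);
      record.status = infected ? 'infected' : 'clean';
    } catch (error) {
      // Leave the record as "pending" so the next run retries it.
      console.error(`Scan failed for ${record.id}`, error);
    }
  }

  // Callers can quarantine, delete, or report these.
  return pending.filter((record) => record.status === 'infected');
}
```

Passing the scanner in as a function keeps the job itself easy to test, and it means the scanning tool can be swapped without touching the bookkeeping.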

Lastly, there are considerations to make for the front end. We’ll likely want to show any previously uploaded files, but we have to be careful about providing access to potentially dangerous ones. Here are a couple different options:

After an upload, only show the file information to the user that uploaded it, letting them know that it won’t be available to others until after it’s been scanned. You may even email them when it’s complete.

After an upload, show the file to every user, but do not provide a way to download the file until after it has been scanned. Include some messaging to tell users the file is pending a scan, but they can still see the file’s metadata.

Which option is right for you really depends on your application use case. And of course, these examples assume your application already has a database and the ability to schedule background tasks.
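
Both options boil down to a small access rule. Here’s a sketch, assuming each file record carries a status flag and the uploader’s ID (a made-up shape); the function names are mine as well.

```javascript
/**
 * Hypothetical record shape:
 * { ownerId: string, status: 'pending' | 'clean' | 'infected' }
 */

// Option 1: pending files are only listed for the user who uploaded them.
function canSeeFile(record, userId) {
  if (record.status === 'clean') return true;
  return record.status === 'pending' && record.ownerId === userId;
}

// Option 2 would list pending files for everyone instead. Either way,
// downloads stay blocked until a scan has marked the file as clean.
function canDownloadFile(record) {
  return record.status === 'clean';
}

const pendingUpload = { ownerId: 'user-1', status: 'pending' };
console.log(canSeeFile(pendingUpload, 'user-1')); // true
console.log(canSeeFile(pendingUpload, 'user-2')); // false
console.log(canDownloadFile(pendingUpload)); // false
```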

It’s also worth mentioning here that one of the OWASP recommendations was to limit file upload capabilities to authenticated users. This makes it easier to track and prevent abuse.

Unfortunately, databases, user accounts, and background tasks all require more time than I have to cover in today’s article, but I hope these concepts give you more ideas on how you can improve your upload security methods.

Block Malware at the Edge

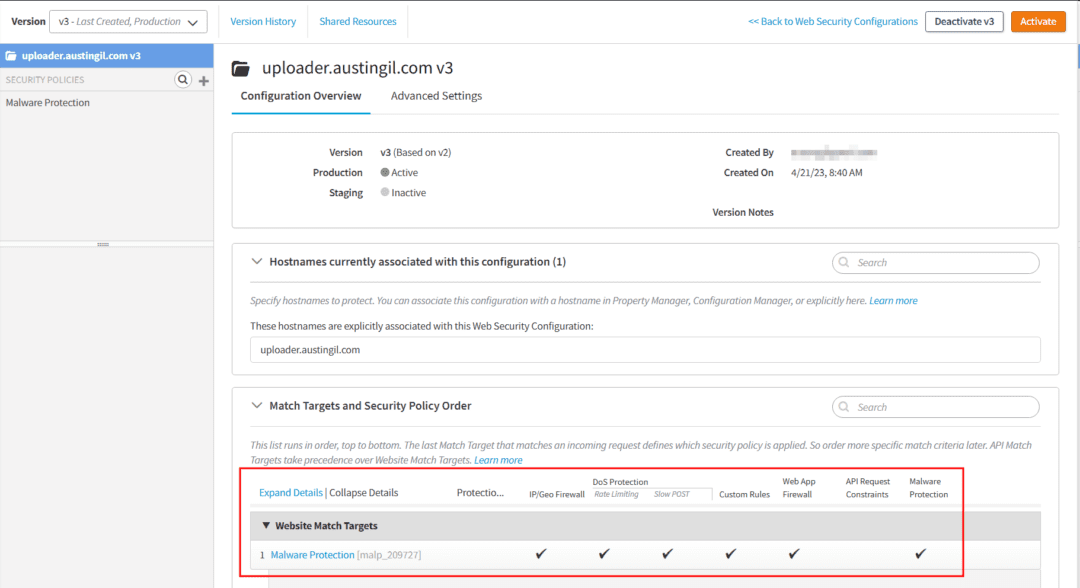

Before we finish up today, there’s one more thing that I want to mention. If you’re an Akamai customer, you actually have access to a malware protection feature as part of the web application firewall products. I got to play around with it briefly and want to show it off because it’s super cool.

I have an application up and running at uploader.austingil.com. It’s already integrated with Akamai’s Ion CDN, so it was easy to also set it up with a security configuration that includes IP/Geo Firewall, Denial of Service protection, WAF, and Malware Protection.

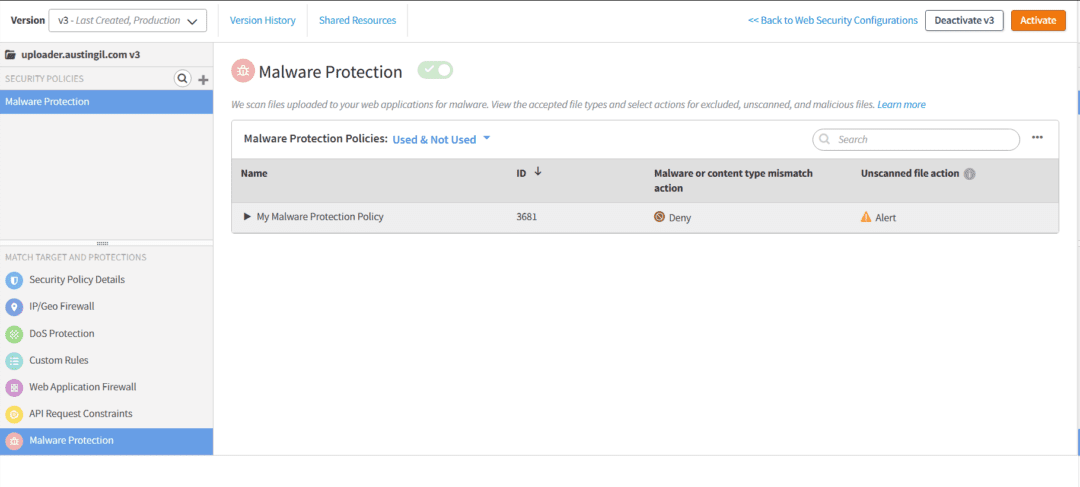

I configured the Malware Protection policy to just deny any request containing malware or a content type mismatch.

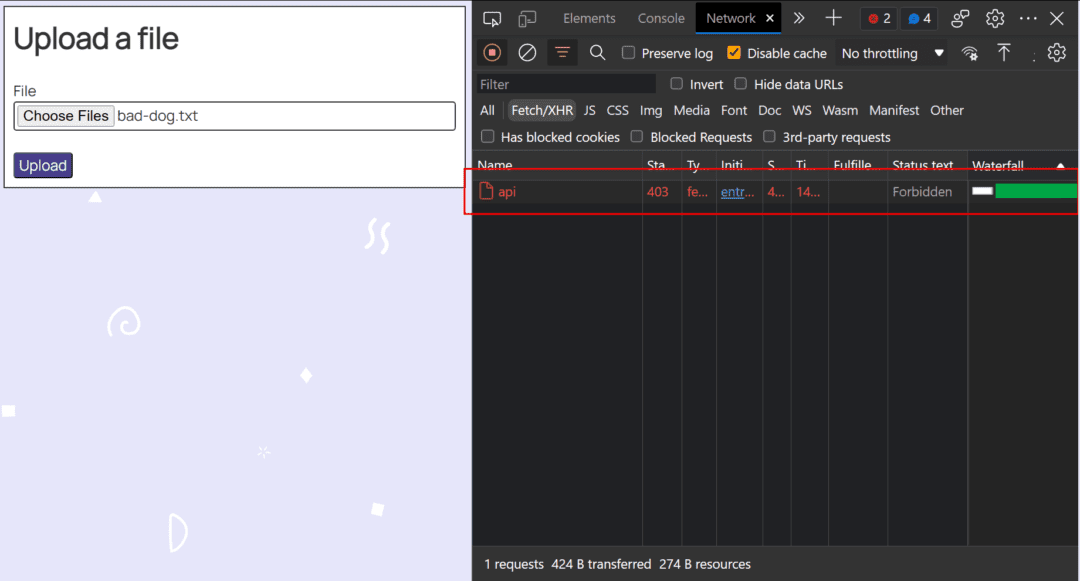

Now, if I go to my application and try to upload a file that has known malware, I’ll see the request rejected almost immediately with a 403 status code.

To be clear, that’s logic I didn’t actually write into my application. That’s happening thanks to Akamai’s malware protection, and I really like this product for a number of reasons.

It’s convenient and easy to set up and modify from within the Akamai UI.

I love that I don’t have to modify my application to integrate the product.

It does its job well and I don’t have to manage maintenance on it.

Last, but not least, the files are scanned on Akamai’s edge servers, which means it’s not only faster, but it also keeps blocked malware from ever even reaching my servers. This is probably my favorite feature.

Due to time and resource restrictions, I think Malware Protection can only scan files up to a certain size, so it won’t work for everything, but it’s a great addition for blocking some files from even getting into your system.

Closing Thoughts

It’s important to remember that there is no one-and-done solution when it comes to security. Each of the steps we covered has its own pros and cons, and it’s generally a good idea to add multiple layers of security to your application.

Okay, that’s going to wrap up this series on file uploads for the web. If you haven’t yet, consider reading some of the other articles.

Please let me know if you found this useful, or if you have ideas on other series you’d like me to cover. I’d love to hear from you.

Thank you so much for reading. If you liked this article, and want to support me, the best ways to do so are to share it, sign up for my newsletter, and follow me on Twitter.

Originally published on austingil.com.